Scrapy Spider Example: Xiaohuar (校花网)

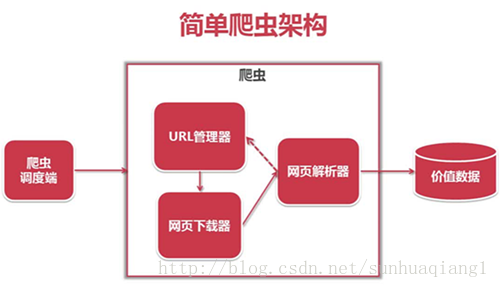

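I have been learning web crawling for a while, and today I use the Scrapy framework to download the images from Xiaohuar (xiaohuar.com) to my local disk. Compared with fetching pages one at a time with the requests library, Scrapy performs better, mainly because its Twisted-based engine keeps many requests in flight at once instead of waiting for each response in turn.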

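The official definition: "Scrapy is an application framework for crawling web sites and extracting structured data which can be used for a wide range of useful applications, like data mining, information processing or historical archival."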

Creating the Scrapy project

With Scrapy installed, create the project from the command line:

```
E:\ScrapyDemo>scrapy startproject xiaohuar
New Scrapy project 'xiaohuar', using template directory 'c:\\users\\lei\\appdata\\local\\programs\\python\\python35\\lib\\site-packages\\scrapy\\templates\\project', created in:
    E:\ScrapyDemo\xiaohuar

You can start your first spider with:
    cd xiaohuar
    scrapy genspider example example.com
```
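Scrapy's speed advantage comes mostly from running many downloads concurrently, and the generated project exposes the relevant knobs in xiaohuar/settings.py. Below is a minimal sketch of the lines that matter here. CONCURRENT_REQUESTS and CONCURRENT_REQUESTS_PER_DOMAIN are shown at Scrapy's default values; the DOWNLOAD_DELAY of 0.5 seconds is only an assumed, polite value and was not part of the original project.

```python
# xiaohuar/settings.py (excerpt, a sketch)
BOT_NAME = 'xiaohuar'

SPIDER_MODULES = ['xiaohuar.spiders']
NEWSPIDER_MODULE = 'xiaohuar.spiders'

# The project template turns on robots.txt checking (it also appears in the crawl log)
ROBOTSTXT_OBEY = True

# Maximum number of requests Scrapy keeps in flight at once (Scrapy default: 16)
CONCURRENT_REQUESTS = 16
# Cap per domain; every request in this project goes to one site (Scrapy default: 8)
CONCURRENT_REQUESTS_PER_DOMAIN = 8
# Assumed value: wait about 0.5 s between requests to the same site (Scrapy default: 0)
DOWNLOAD_DELAY = 0.5
```

Raising the concurrency makes the crawl faster. A small download delay trades a little of that speed for a lighter load on the target site.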

Creating a spider

Creating the project also creates a directory with the same name as the project. Change into that directory and run:

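```
E:\ScrapyDemo\xiaohuar>scrapy genspider -t basic xiaohua xiaohuar.com
Created spider 'xiaohua' using template 'basic' in module:
  xiaohuar.spiders.xiaohua
```

In this command, "xiaohua" is the spider's name, which is also the file name of the generated .py file in the spiders directory. "xiaohuar.com" is the domain of the site to crawl. The template writes it into allowed_domains and start_urls, and it can be changed later in the code.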
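The domain given to genspider ends up in allowed_domains, which Scrapy uses to drop requests aimed at other sites. The standard-library sketch below approximates that host check. is_allowed is a hypothetical helper, and its regex follows the rule used by Scrapy's OffsiteMiddleware (the host must equal an allowed domain or be a subdomain of it), although the real middleware handles more cases.

```python
import re
from urllib.parse import urlparse


def is_allowed(url, allowed_domains):
    """Hypothetical helper: True if the URL's host is an allowed domain or one of its subdomains."""
    pattern = r'^(.*\.)?(%s)$' % '|'.join(re.escape(d) for d in allowed_domains)
    host = urlparse(url).hostname or ''
    return re.match(pattern, host) is not None


allowed = ['xiaohuar.com']
print(is_allowed('http://www.xiaohuar.com/list-1-0.html', allowed))  # True: subdomain
print(is_allowed('http://xiaohuar.com/', allowed))                   # True: exact match
print(is_allowed('http://example.com/a.jpg', allowed))               # False: other site
print(is_allowed('http://notxiaohuar.com/', allowed))                # False: not a subdomain
```

Because www.xiaohuar.com counts as a subdomain, "xiaohuar.com" is enough to cover both the list pages and the image URLs.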

Writing the spider code

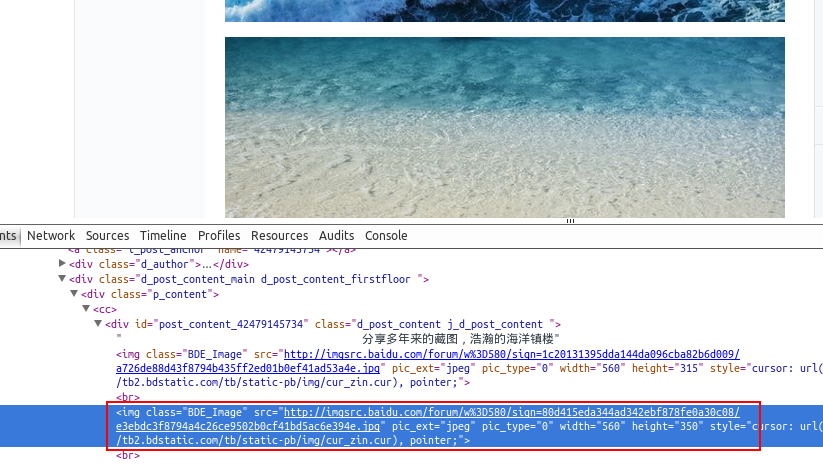

Next, edit xiaohua.py in E:\ScrapyDemo\xiaohuar\xiaohuar\spiders. Most of the work is configuring the start URLs and deciding how each downloaded page is parsed.

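```python
# -*- coding: utf-8 -*-
import os
import re

import scrapy
from scrapy.http import Request

# Local directory the images are saved to
SAVE_DIR = 'E:\\xiaohua_img'


class XiaohuaSpider(scrapy.Spider):
    name = 'xiaohua'
    allowed_domains = ['xiaohuar.com']
    # List pages list-1-0.html through list-1-42.html
    start_urls = ["http://www.xiaohuar.com/list-1-%s.html" % i for i in range(43)]

    def parse(self, response):
        if "www.xiaohuar.com/list-1" in response.url:
            # HTML source of the downloaded list page
            html = response.text
            # Image paths in the page look like: src="/d/file/20160126/905e563421921adf9b6fb4408ec4e72f.jpg"
            # Match all of them with a regex; the result is a list of relative paths
            img_urls = re.findall(r'/d/file/\d+/\w+\.jpg', html)
            # Request each image
            for img_url in img_urls:
                # Complete the relative path into an absolute URL
                img_url = response.urljoin(img_url)
                # No callback is given, so the response comes back to parse()
                yield Request(img_url)
        else:
            # Any other response is an image: save it
            url = response.url
            # File name to save as; "\." matches only a literal dot
            title = re.findall(r'\w+\.jpg', url)[0]
            # Create the target directory if needed, then write the raw bytes
            os.makedirs(SAVE_DIR, exist_ok=True)
            with open(os.path.join(SAVE_DIR, title), 'wb') as f:
                f.write(response.body)
```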
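The spider's URL handling can be checked without running Scrapy at all. This standard-library sketch rebuilds the 43 list-page URLs and completes a relative image path against a list page with urllib.parse.urljoin, the standard-library counterpart of Scrapy's response.urljoin. The image path is the example from the comment in the spider code.

```python
from urllib.parse import urljoin

# The 43 list pages: list-1-0.html ... list-1-42.html
start_urls = ["http://www.xiaohuar.com/list-1-%s.html" % i for i in range(43)]
print(len(start_urls), start_urls[0], start_urls[-1])

# A relative image path as it appears in the page source
page_url = "http://www.xiaohuar.com/list-1-0.html"
rel_path = "/d/file/20160126/905e563421921adf9b6fb4408ec4e72f.jpg"

# A leading "/" makes urljoin resolve the path against the site root
img_url = urljoin(page_url, rel_path)
print(img_url)
# http://www.xiaohuar.com/d/file/20160126/905e563421921adf9b6fb4408ec4e72f.jpg

# An absolute URL comes back unchanged, so no separate "http://" check is needed
print(urljoin(page_url, img_url) == img_url)  # True
```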

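In the spider, a regular expression matches the image paths. Other sites work much the same way, but the pattern has to be worked out from each site's actual page source.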
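To see what the two regular expressions actually match, try them on a small piece of HTML. The fragment below is made up, modeled on the src example in the spider's comments, and the example.com URL is likewise hypothetical. The last two lines show why the dot before "jpg" should be escaped when extracting the file name.

```python
import re

# Hypothetical HTML fragment modeled on the list page's <img> tags
html = '''
<img src="/d/file/20160126/905e563421921adf9b6fb4408ec4e72f.jpg">
<img src="/d/file/20170301/0a1b2c3d.jpg">
<img src="/d/file/20170301/logo.png">
'''

# Image paths: only .jpg files under /d/file/<digits>/ are matched
img_urls = re.findall(r'/d/file/\d+/\w+\.jpg', html)
print(img_urls)
# ['/d/file/20160126/905e563421921adf9b6fb4408ec4e72f.jpg', '/d/file/20170301/0a1b2c3d.jpg']

# File name from an image URL
url = "http://www.xiaohuar.com/d/file/20160126/905e563421921adf9b6fb4408ec4e72f.jpg"
print(re.findall(r'\w+\.jpg', url)[0])  # 905e563421921adf9b6fb4408ec4e72f.jpg

# An unescaped "." matches any character, so it "finds" a name in a .png URL
print(re.findall(r'\w*.jpg', "http://example.com/photo_jpg.png"))   # ['photo_jpg']
print(re.findall(r'\w+\.jpg', "http://example.com/photo_jpg.png"))  # []
```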

Running the spider

```
E:\ScrapyDemo\xiaohuar>scrapy crawl xiaohua
2017-10-22 22:30:11 [scrapy.utils.log] INFO: Scrapy 1.4.0 started (bot: xiaohuar)
2017-10-22 22:30:11 [scrapy.utils.log] INFO: Overridden settings: {'BOT_NAME': 'xiaohuar', 'SPIDER_MODULES': ['xiaohuar.spiders'], 'ROBOTSTXT_OBEY': True, 'NEWSPIDER_MODULE': 'xiaohuar.spiders'}
2017-10-22 22:30:11 [scrapy.middleware] INFO: Enabled extensions:
['scrapy.extensions.telnet.TelnetConsole',
 'scrapy.extensions.corestats.CoreStats',
 'scrapy.extensions.logstats.LogStats']
2017-10-22 22:30:12 [scrapy.middleware] INFO: Enabled downloader middlewares:
['scrapy.downloadermiddlewares.robotstxt.RobotsTxtMiddleware',
 'scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware',
 'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware',
 'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware',
 'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware',
 'scrapy.downloadermiddlewares.retry.RetryMiddleware',
 'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware',
 'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware',
 'scrapy.downloadermiddlewares.redirect.RedirectMiddleware',
 'scrapy.downloadermiddlewares.cookies.CookiesMiddleware',
 'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware',
 'scrapy.downloadermiddlewares.stats.DownloaderStats']
2017-10-22 22:30:12 [scrapy.middleware] INFO: Enabled spider middlewares:
['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware',
 'scrapy.spidermiddlewares.offsite.OffsiteMiddleware',
 'scrapy.spidermiddlewares.referer.RefererMiddleware',
 'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware',
 'scrapy.spidermiddlewares.depth.DepthMiddleware']
2017-10-22 22:30:12 [scrapy.middleware] INFO: Enabled item pipelines:
[]
2017-10-22 22:30:12 [scrapy.core.engine] INFO: Spider opened
2017-10-22 22:30:12 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)
2017-10-22 22:30:12 [scrapy.extensions.telnet] DEBUG: Telnet console listening on 127.0.0.1:6023
2017-10-22 22:30:12 [scrapy.core.engine] DEBUG: Crawled (200) <GET http://www.xiaohuar.com/robots.txt> (referer: None)
2017-10-22 22:30:13 [scrapy.core.engine] DEBUG: Crawled (200) <GET http://www.xiaohuar.com/list-1-0.html> (referer: None)
2017-10-22 22:30:13 [scrapy.core.engine] DEBUG: Crawled (200) <GET /zb_users/upload/202003/1ub3ar0pz3y.jpg> (referer: http://www.xiaohuar.com/list-1-0.html)
2017-10-22 22:30:13 [scrapy.core.engine] DEBUG: Crawled (200) <GET /zb_users/upload/202003/b3ulk5gjyuc.jpg> (referer: http://www.xiaohuar.com/list-1-0.html)
2017-10-22 22:30:13 [scrapy.core.engine] DEBUG: Crawled (200) <GET /zb_users/upload/202003/3ua4h2am4w1.jpg> (referer: http://www.xiaohuar.com/list-1-0.html)
2017-10-22 22:30:13 [scrapy.core.engine] DEBUG: Crawled (200) <GET /zb_users/upload/202003/wyzulc0peyo.jpg> (referer: http://www.xiaohuar.com/list-1-0.html)
2017-10-22 22:30:13 [scrapy.core.engine] DEBUG: Crawled (200) <GET /zb_users/upload/202003/bzllg4mzfhi.jpg> (referer: http://www.xiaohuar.com/list-1-0.html)
2017-10-22 22:30:14 [scrapy.core.engine] DEBUG: Crawled (200) <GET /zb_users/upload/202003/kjgk3wx23os.jpg> (referer: http://www.xiaohuar.com/list-1-0.html)
2017-10-22 22:30:14 [scrapy.core.engine] DEBUG: Crawled (200) <GET /zb_users/upload/202003/gy5iopomyg3.jpg> (referer: http://www.xiaohuar.com/list-1-0.html)
2017-10-22 22:30:14 [scrapy.core.engine] DEBUG: Crawled (200) <GET /zb_users/upload/202003/dtyytqdy1pn.jpg> (referer: http://www.xiaohuar.com/list-1-0.html)
2017-10-22 22:30:14 [scrapy.core.engine] DEBUG: Crawled (200) <GET /zb_users/upload/202003/bw1xnhukzl4.jpg> (referer: http://www.xiaohuar.com/list-1-0.html)
2017-10-22 22:30:14 [scrapy.core.engine] DEBUG: Crawled (200) <GET /zb_users/upload/202003/14ahqvlj3g4.jpg> (referer: http://www.xiaohuar.com/list-1-0.html)
2017-10-22 22:30:14 [scrapy.core.engine] DEBUG: Crawled (200) <GET /zb_users/upload/202003/fips2taxhwp.jpg> (referer: http://www.xiaohuar.com/list-1-0.html)
2017-10-22 22:30:14 [scrapy.core.engine] DEBUG: Crawled (200) <GET /zb_users/upload/202003/zcxofl51ebh.jpg> (referer: http://www.xiaohuar.com/list-1-0.html)
2017-10-22 22:30:14 [scrapy.core.engine] DEBUG: Crawled (200) <GET /zb_users/upload/202003/guod24eaxkh.jpg> (referer: http://www.xiaohuar.com/list-1-0.html)
2017-10-22 22:30:14 [scrapy.core.engine] DEBUG: Crawled (200) <GET /zb_users/upload/202003/o42vvsomv2j.jpg> (referer: http://www.xiaohuar.com/list-1-0.html)
2017-10-22 22:30:15 [scrapy.core.engine] DEBUG: Crawled (200) <GET /zb_users/upload/202003/gtuefx04sxo.jpg> (referer: http://www.xiaohuar.com/list-1-0.html)
2017-10-22 22:30:15 [scrapy.core.engine] DEBUG: Crawled (200) <GET /zb_users/upload/202003/v3qsj5ptrzo.jpg> (referer: http://www.xiaohuar.com/list-1-0.html)
2017-10-22 22:30:15 [scrapy.core.engine] DEBUG: Crawled (200) <GET /zb_users/upload/202003/cyvgcmu3ylk.jpg> (referer: http://www.xiaohuar.com/list-1-0.html)
2017-10-22 22:30:15 [scrapy.core.engine] DEBUG: Crawled (200) <GET /zb_users/upload/202003/ofionrz3mfi.jpg> (referer: http://www.xiaohuar.com/list-1-0.html)
2017-10-22 22:30:15 [scrapy.core.engine] DEBUG: Crawled (200) <GET /zb_users/upload/202003/3amqwefve5w.jpg> (referer: http://www.xiaohuar.com/list-1-0.html)
2017-10-22 22:30:15 [scrapy.core.engine] DEBUG: Crawled (200) <GET /zb_users/upload/202003/3e22qnfy1xn.jpg> (referer: http://www.xiaohuar.com/list-1-0.html)
2017-10-22 22:30:15 [scrapy.core.engine] DEBUG: Crawled (200) <GET /zb_users/upload/202003/goiolfs3beg.jpg> (referer: http://www.xiaohuar.com/list-1-0.html)
2017-10-22 22:30:15 [scrapy.core.engine] DEBUG: Crawled (200) <GET /zb_users/upload/202003/qhhl1lbrkaw.jpg> (referer: http://www.xiaohuar.com/list-1-0.html)
2017-10-22 22:30:15 [scrapy.core.engine] INFO: Closing spider (finished)
2017-10-22 22:30:15 [scrapy.statscollectors] INFO: Dumping Scrapy stats:
{'downloader/request_bytes': 8785,
 'downloader/request_count': 24,
 'downloader/request_method_count/GET': 24,
 'downloader/response_bytes': 2278896,
 'downloader/response_count': 24,
 'downloader/response_status_count/200': 24,
 'finish_reason': 'finished',
 'finish_time': datetime.datetime(2017, 10, 22, 14, 30, 15, 892287),
 'log_count/DEBUG': 25,
 'log_count/INFO': 7,
 'request_depth_max': 1,
 'response_received_count': 24,
 'scheduler/dequeued': 23,
 'scheduler/dequeued/memory': 23,
 'scheduler/enqueued': 23,
 'scheduler/enqueued/memory': 23,
 'start_time': datetime.datetime(2017, 10, 22, 14, 30, 12, 698874)}
2017-10-22 22:30:15 [scrapy.core.engine] INFO: Spider closed (finished)
```


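Saving the images

When saving the images, the backslashes in a Windows path must be escaped. In an ordinary Python string literal, "\x" starts a hexadecimal escape, so "E:\xiaohua_img\01.jpg" does not even compile. "\1" is an octal escape for the control character "\x01", so "E:\\xiaohua_img\1.jpg" produces an invalid file name. The write succeeds only once every backslash is doubled: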

```
>>> r = requests.get("/zb_users/upload/202003/lanpzbatmig.html")
>>> open("E:\xiaohua_img\01.jpg",'wb').write(r.content)
  File "<stdin>", line 1
SyntaxError: (unicode error) 'unicodeescape' codec can't decode by
>>> open("E:\\xiaohua_img\1.jpg",'wb').write(r.content)
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
OSError: [Errno 22] Invalid argument: 'E:\\xiaohua_img\x01.jpg'
>>> open("E:\\xiaohua_img\\1.jpg",'wb').write(r.content)
```
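Doubling every backslash works, but Python has less error-prone ways to write Windows paths. The sketch below compares them. It uses ntpath, the standard-library module behind os.path on Windows, so the example behaves the same on any OS; the file name 1.jpg is the one from the session above.

```python
import ntpath

# "\1" is an octal escape for the control character \x01, which is why
# "E:\\xiaohua_img\1.jpg" produced an invalid file name
assert "E:\\xiaohua_img\1.jpg" == "E:\\xiaohua_img\x01.jpg"

escaped = "E:\\xiaohua_img\\1.jpg"                # every backslash doubled
raw = r"E:\xiaohua_img\1.jpg"                     # raw string: backslashes kept literally
joined = ntpath.join(r"E:\xiaohua_img", "1.jpg")  # let the path module insert the separator

print(escaped == raw == joined)  # True
print(joined)                    # E:\xiaohua_img\1.jpg
```

Raw strings are convenient for fixed paths. Joining with os.path.join, as the spider does for the save directory, avoids writing separators by hand at all.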
