Upsampling and the various inverse operations in PyTorch, explained

import torch.nn.functional as F

import torch.nn as nn

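F.upsample(input, size=None, scale_factor=None, mode='nearest', align_corners=None)

Note: in current PyTorch releases `F.upsample` is deprecated in favor of `F.interpolate`, which takes the same leading arguments. The docstring below comes from an older release; newer versions also accept a float or a tuple of floats for `scale_factor`.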

r"""Upsamples the input to either the given :attr:`size` or the given
:attr:`scale_factor`.

The algorithm used for upsampling is determined by :attr:`mode`.

Currently temporal, spatial and volumetric upsampling are supported, i.e.
expected inputs are 3-D, 4-D or 5-D in shape.

The input dimensions are interpreted in the form:
`mini-batch x channels x [optional depth] x [optional height] x width`.

The modes available for upsampling are: `nearest`, `linear` (3D-only),
`bilinear` (4D-only), `trilinear` (5D-only)

Args:
    input (Tensor): the input tensor
    size (int or Tuple[int] or Tuple[int, int] or Tuple[int, int, int]):
        output spatial size.
    scale_factor (int): multiplier for spatial size. Has to be an integer.
    mode (string): algorithm used for upsampling:
        'nearest' | 'linear' | 'bilinear' | 'trilinear'. Default: 'nearest'
    align_corners (bool, optional): if True, the corner pixels of the input
        and output tensors are aligned, and thus preserving the values at
        those pixels. This only has effect when :attr:`mode` is `linear`,
        `bilinear`, or `trilinear`. Default: False

.. warning::
    With ``align_corners = True``, the linearly interpolating modes
    (`linear`, `bilinear`, and `trilinear`) don't proportionally align the
    output and input pixels, and thus the output values can depend on the
    input size. This was the default behavior for these modes up to version
    0.3.1. Since then, the default behavior is ``align_corners = False``.
    See :class:`~torch.nn.Upsample` for concrete examples on how this
    affects the outputs.
"""

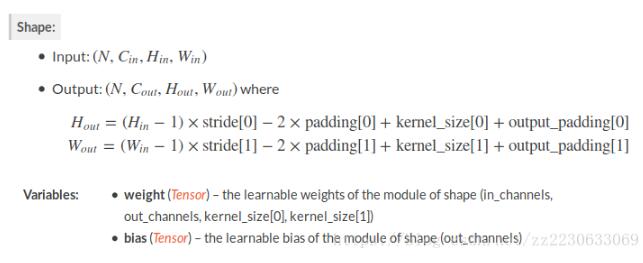
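A minimal sketch of the upsampling calls documented above, using made-up tensors. It calls `F.interpolate`, the current name for the same operation, and shows which input rank goes with which mode, plus how `align_corners` shifts the sampling positions of the linear modes. The values and sizes are arbitrary choices for illustration.

```python
import torch
import torch.nn.functional as F

# 4-D input (N, C, H, W) = (1, 1, 2, 2) holding the values 1..4.
x = torch.arange(1.0, 5.0).view(1, 1, 2, 2)

# 'nearest' with scale_factor=2 copies every pixel into a 2x2 block.
up_nearest = F.interpolate(x, scale_factor=2, mode="nearest")
print(up_nearest[0, 0])
# tensor([[1., 1., 2., 2.],
#         [1., 1., 2., 2.],
#         [3., 3., 4., 4.],
#         [3., 3., 4., 4.]])

# 'bilinear' needs 4-D input; here an explicit size replaces scale_factor.
up_bilinear = F.interpolate(x, size=(3, 5), mode="bilinear", align_corners=False)
print(up_bilinear.shape)  # torch.Size([1, 1, 3, 5])

# 'linear' needs 3-D input (N, C, W); 'trilinear' needs 5-D input (N, C, D, H, W).
seq = torch.randn(2, 3, 10)
vol = torch.randn(1, 1, 4, 4, 4)
up_seq = F.interpolate(seq, scale_factor=2, mode="linear", align_corners=False)
up_vol = F.interpolate(vol, scale_factor=2, mode="trilinear", align_corners=False)
print(up_seq.shape)  # torch.Size([2, 3, 20])
print(up_vol.shape)  # torch.Size([1, 1, 8, 8, 8])

# align_corners: stretch two samples (0 and 1) to four with 'linear'.
line = torch.tensor([[[0.0, 1.0]]])
# True: the end samples line up exactly and the points in between are evenly spaced.
aligned = F.interpolate(line, size=4, mode="linear", align_corners=True)
print(aligned)  # tensor([[[0.0000, 0.3333, 0.6667, 1.0000]]])
# False: output i samples source coordinate (i + 0.5) * (2 / 4) - 0.5,
# clamped to the valid input range.
half_pixel = F.interpolate(line, size=4, mode="linear", align_corners=False)
print(half_pixel)  # tensor([[[0.0000, 0.2500, 0.7500, 1.0000]]])
```

With `align_corners=False` the output no longer reproduces the exact endpoints spacing of the `True` case; that is the behavior change the warning in the docstring refers to.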

nn.ConvTranspose2d(in_channels, out_channels, kernel_size, stride=1, padding=0, output_padding=0, groups=1, bias=True, dilation=1)

"""
Parameters:
    in_channels (int) – Number of channels in the input image
    out_channels (int) – Number of channels produced by the convolution
    kernel_size (int or tuple) – Size of the convolving kernel
    stride (int or tuple, optional) – Stride of the convolution. Default: 1
    padding (int or tuple, optional) – dilation * (kernel_size - 1) - padding zero-padding will be added to both sides of each dimension in the input. Default: 0
    output_padding (int or tuple, optional) – Additional size added to one side of each dimension in the output shape. Default: 0
    groups (int, optional) – Number of blocked connections from input channels to output channels. Default: 1
    bias (bool, optional) – If True, adds a learnable bias to the output. Default: True
    dilation (int or tuple, optional) – Spacing between kernel elements. Default: 1
"""

How it is computed:
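The output size of `nn.ConvTranspose2d` follows the formula given in the PyTorch documentation, applied per spatial dimension: $H_{out} = (H_{in} - 1) \times \text{stride} - 2 \times \text{padding} + \text{dilation} \times (\text{kernel\_size} - 1) + \text{output\_padding} + 1$. The sketch below checks that formula against the real layer for a few arbitrary settings, then shows the typical use: undoing the shape change of a stride-2 `Conv2d`. Only the shape is restored; the transposed layer has its own weights, so the values are not inverted. `conv_transpose_out` is a hypothetical helper written for this example.

```python
import torch
import torch.nn as nn

def conv_transpose_out(size, kernel_size, stride=1, padding=0, output_padding=0, dilation=1):
    """Hypothetical helper: output length of ConvTranspose along one spatial dimension."""
    return (size - 1) * stride - 2 * padding + dilation * (kernel_size - 1) + output_padding + 1

# (kernel_size, stride, padding, output_padding, dilation); PyTorch requires
# output_padding to be smaller than either stride or dilation.
settings = [(3, 1, 0, 0, 1), (4, 2, 1, 0, 1), (3, 2, 1, 1, 1), (3, 3, 2, 2, 2)]
probe = torch.zeros(1, 1, 7, 7)
for k, s, p, op, d in settings:
    layer = nn.ConvTranspose2d(1, 1, kernel_size=k, stride=s, padding=p,
                               output_padding=op, dilation=d)
    assert layer(probe).shape[-1] == conv_transpose_out(7, k, s, p, op, d)

# Typical use: bring a stride-2 Conv2d output back to the input resolution.
x = torch.randn(1, 3, 32, 32)
down = nn.Conv2d(3, 8, kernel_size=3, stride=2, padding=1)
h = down(x)                     # 32 -> 16
print(h.shape)                  # torch.Size([1, 8, 16, 16])

# Same hyper-parameters give 31, not 32: a 31x31 input would also have become 16x16.
up = nn.ConvTranspose2d(8, 3, kernel_size=3, stride=2, padding=1)
print(up(h).shape)              # torch.Size([1, 3, 31, 31])

# output_padding=1 adds the missing row and column on one side ...
up_padded = nn.ConvTranspose2d(8, 3, kernel_size=3, stride=2, padding=1, output_padding=1)
print(up_padded(h).shape)       # torch.Size([1, 3, 32, 32])

# ... or pass the target size to forward() and let PyTorch infer output_padding.
print(up(h, output_size=[32, 32]).shape)  # torch.Size([1, 3, 32, 32])
```

The 31-versus-32 case is exactly why `output_padding` exists: several input sizes collapse to the same strided-convolution output, and the transposed layer needs a hint to pick one.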

Definition: nn.MaxUnpool2d(kernel_size, stride=None, padding=0)

Call (the module's forward method):

def forward(self, input, indices, output_size=None):
    return F.max_unpool2d(input, indices, self.kernel_size, self.stride,
                          self.padding, output_size)
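A minimal round trip, modeled on the example in the PyTorch documentation. `MaxPool2d` has to be created with `return_indices=True` so that it hands back the indices that `MaxUnpool2d` consumes; the 4x4 input values are just an illustration.

```python
import torch
import torch.nn as nn

# return_indices=True makes MaxPool2d also return where each maximum came from.
pool = nn.MaxPool2d(kernel_size=2, stride=2, return_indices=True)
unpool = nn.MaxUnpool2d(kernel_size=2, stride=2)

x = torch.tensor([[[[ 1.,  2.,  3.,  4.],
                    [ 5.,  6.,  7.,  8.],
                    [ 9., 10., 11., 12.],
                    [13., 14., 15., 16.]]]])

out, indices = pool(x)
print(out)      # the window maxima 6, 8, 14, 16
print(indices)  # their flat positions inside each 4x4 plane: 5, 7, 13, 15

# Each maximum goes back to its original position; every other entry becomes 0.
restored = unpool(out, indices)
print(restored)
# tensor([[[[ 0.,  0.,  0.,  0.],
#           [ 0.,  6.,  0.,  8.],
#           [ 0.,  0.,  0.,  0.],
#           [ 0., 14.,  0., 16.]]]])
```

The zeros are where the non-maximal values used to be, which is why this is only a partial inverse.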

r"""Computes a partial inverse of :class:`MaxPool2d`.

:class:`MaxPool2d` is not fully invertible, since the non-maximal values are lost.

:class:`MaxUnpool2d` takes in as input the output of :class:`MaxPool2d`

including the indices of the maximal values and computes a partial inverse

in which all non-maximal values are set to zero.

.. note:: `MaxPool2d` can map several input sizes to the same output sizes.

Hence, the inversion process can get ambiguous.

To accommodate this, you can provide the needed output size

as an additional argument `output_size` in the forward call.

See the Inputs and Example below.

Args:

kernel_size (int or tuple): Size of the max pooling window.

stride (int or tuple): Stride of the max pooling window.

It is set to ``kernel_size`` by default.

padding (int or tuple): Padding that was added to the input

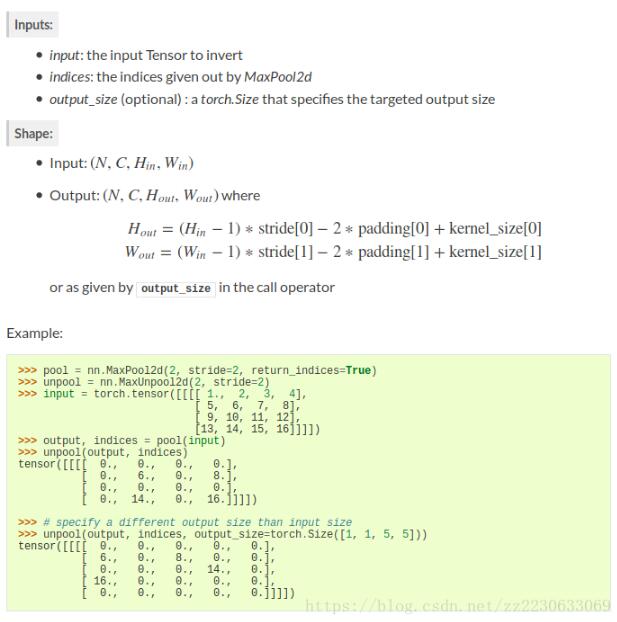

Inputs:

- `input`: the input Tensor to invert

- `indices`: the indices given out by `MaxPool2d`

- `output_size` (optional) : a `torch.Size` that specifies the targeted output size

Shape:

- Input: :math:`(N, C, H_{in}, W_{in})`

- Output: :math:`(N, C, H_{out}, W_{out})` where

计算公式:见下面

Example: 见下面

"""

F.max_unpool2d(input, indices, kernel_size, stride=None, padding=0, output_size=None)

Usage is the same as for the module above.
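The note about ambiguous sizes is easiest to see with a concrete case. This sketch uses the module form (`F.max_unpool2d` takes the same `output_size` argument), and its 4x5 input is modeled on the PyTorch documentation example; the zero tensors are arbitrary probes.

```python
import torch
import torch.nn as nn

pool = nn.MaxPool2d(2, stride=2, return_indices=True)
unpool = nn.MaxUnpool2d(2, stride=2)

# A 4x4 and a 4x5 input both pool down to 2x2, so the pooled tensor alone
# cannot tell MaxUnpool2d which of the two sizes to rebuild.
a, _ = pool(torch.zeros(1, 1, 4, 4))
b, _ = pool(torch.zeros(1, 1, 4, 5))
print(a.shape, b.shape)  # torch.Size([1, 1, 2, 2]) torch.Size([1, 1, 2, 2])

# Without output_size the default is (H_in - 1) * stride - 2 * padding + kernel_size
# = (2 - 1) * 2 - 0 + 2 = 4 in both directions, which is wrong for a 4x5 input,
# so the original size is passed explicitly.
x = torch.arange(1.0, 21.0).view(1, 1, 4, 5)
out, indices = pool(x)
restored = unpool(out, indices, output_size=x.size())
print(restored)
# tensor([[[[ 0.,  0.,  0.,  0.,  0.],
#           [ 0.,  7.,  0.,  9.,  0.],
#           [ 0.,  0.,  0.,  0.,  0.],
#           [ 0., 17.,  0., 19.,  0.]]]])
```

The last column of the 4x5 input never falls inside a pooling window, so it comes back as zeros as well.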

def max_unpool2d(input, indices, kernel_size, stride=None, padding=0,
                 output_size=None):
    r"""Computes a partial inverse of :class:`MaxPool2d`.

    See :class:`~torch.nn.MaxUnpool2d` for details.
    """
    pass  # implementation omitted in this article; see the PyTorch source
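A short sketch, assuming the same `kernel_size` and `stride` are passed to both forms, showing that the functional call and the module produce identical results. The input is random; `F.max_pool2d(..., return_indices=True)` supplies the indices.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
x = torch.randn(1, 2, 6, 6)

# Functional pooling; return_indices=True also returns the positions of the maxima.
out, indices = F.max_pool2d(x, kernel_size=2, stride=2, return_indices=True)

# Functional unpooling: kernel_size/stride/padding are passed on every call.
restored_f = F.max_unpool2d(out, indices, kernel_size=2, stride=2)

# Module form: the same hyper-parameters are stored once at construction time.
restored_m = nn.MaxUnpool2d(kernel_size=2, stride=2)(out, indices)

print(torch.equal(restored_f, restored_m))  # True
print(restored_f.shape)                      # torch.Size([1, 2, 6, 6])
```

The module is convenient inside an `nn.Module` graph; the functional form avoids creating a layer object when the hyper-parameters are only known at call time.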

That is everything in this article on upsampling and the various inverse operations in PyTorch. I hope it serves as a useful reference, and please keep supporting 【听图阁-专注于Python设计】.